3D Aware Region Prompted Vision Language Model

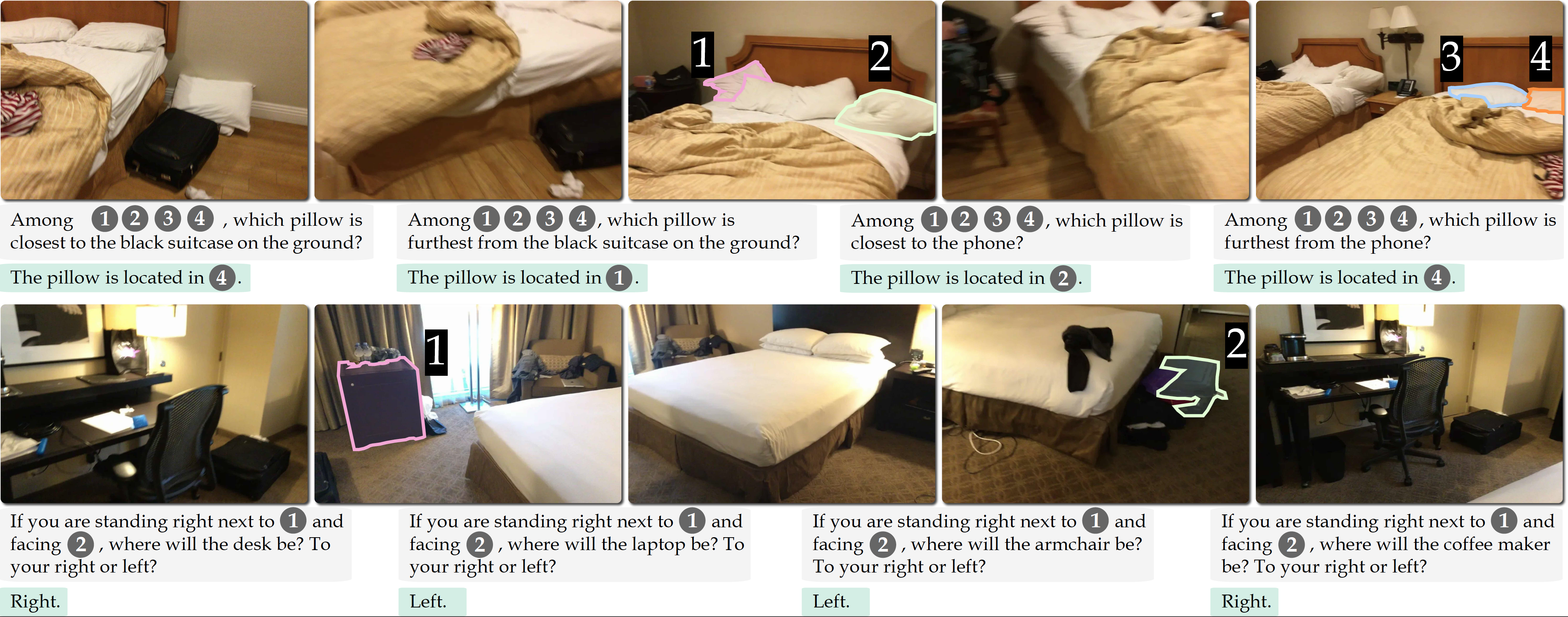

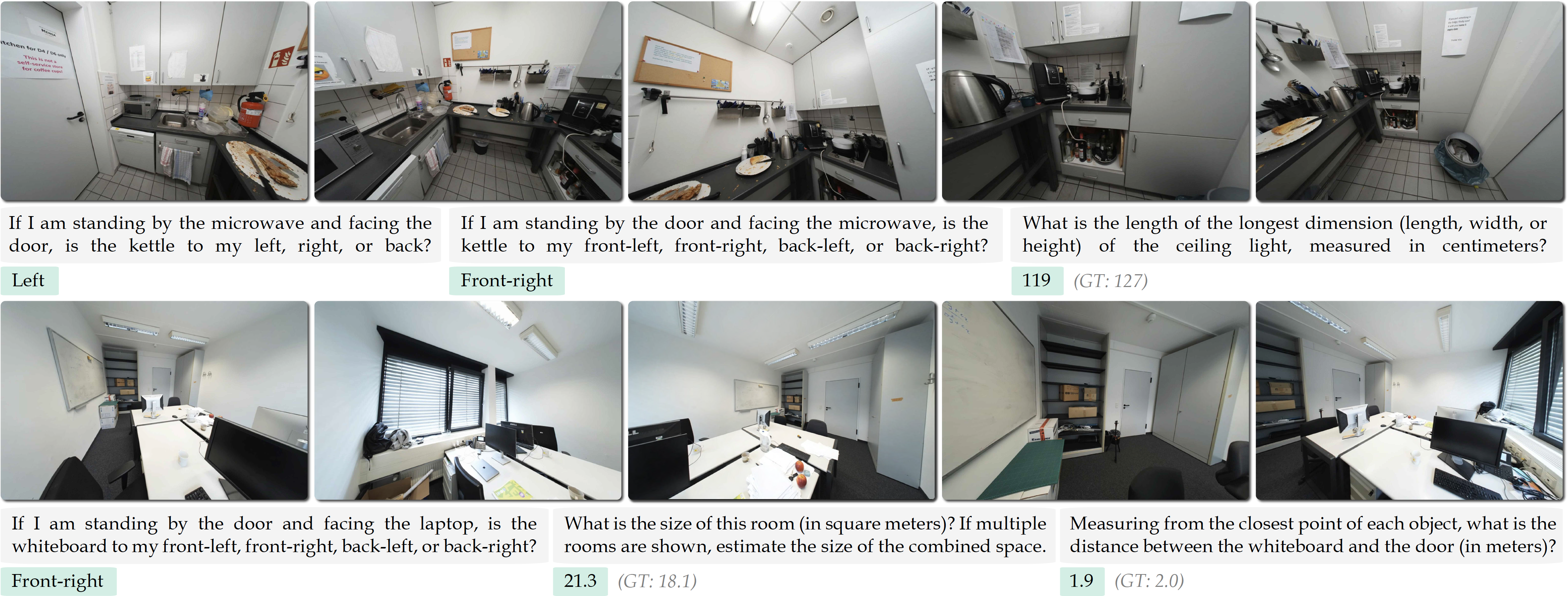

Region-level spatial reasoning for in-the-wild scenes without sensory 3D inputs.

ICLR 2026

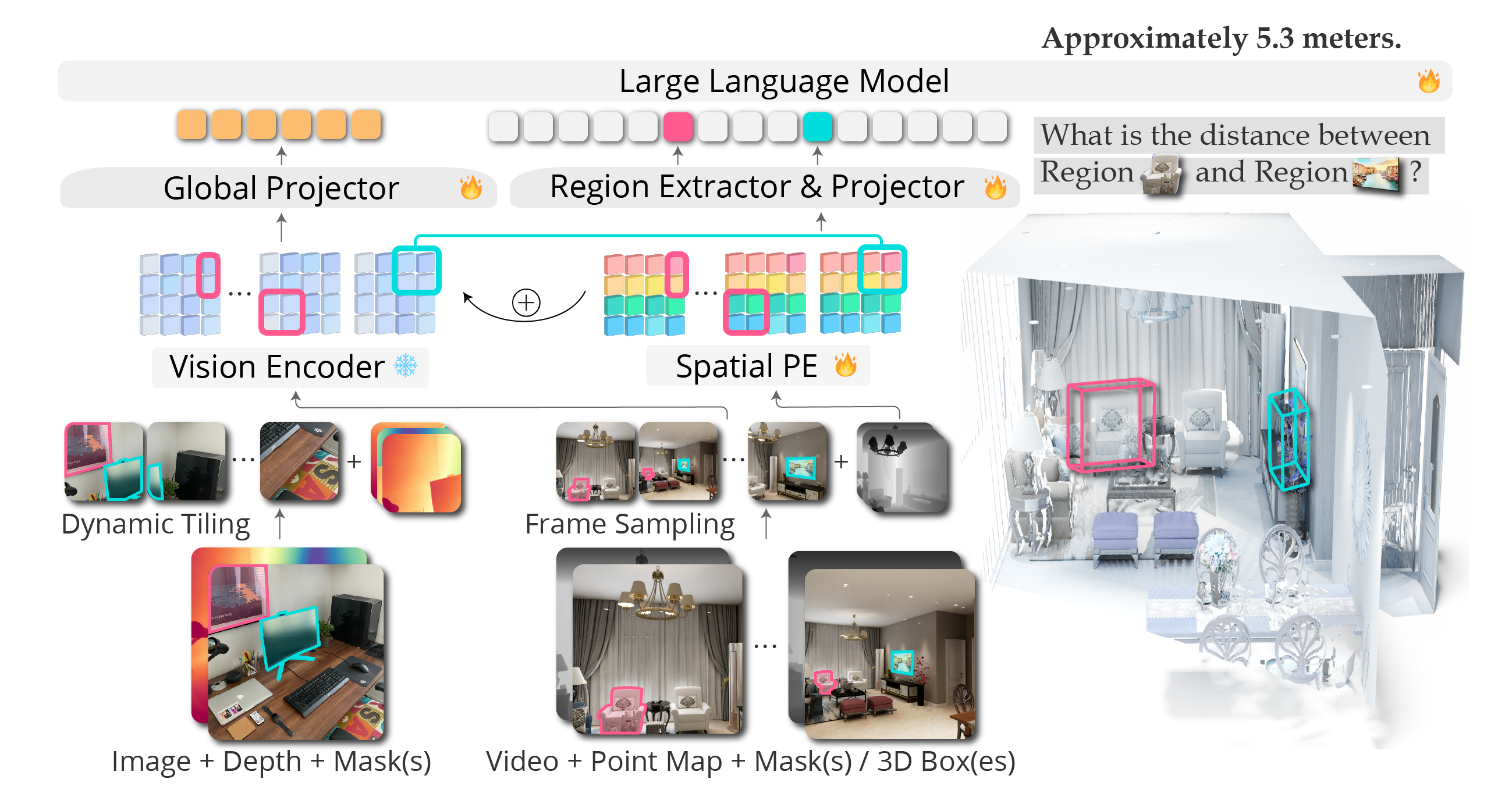

A key idea of SR-3D is the introduction of a canonical positional representation shared across single-view and multi-view inputs. This unified representation enables large-scale single-view pretraining and supports the transfer of learned spatial priors to multi-view settings.
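This page does not spell out how the canonical positional representation is computed, so the following is only a minimal sketch of one way such a shared representation could be built, not SR-3D's actual implementation. It assumes a per-pixel depth estimate, pinhole intrinsics, and camera-to-world poses are available; all function names (`backproject`, `to_world`, `canonicalize`, `canonical_positions`) are hypothetical. Each view is lifted to 3D points, moved into a common world frame, and normalized jointly into a cube of side 2 centered at the origin. A single image is simply the one-view case with an identity pose, which is what lets both input types share the same coordinate space.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix = Sequence[Sequence[float]]

# Identity rotation: used when a single image defines the world frame (assumption).
IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def backproject(depth: Matrix, fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Lift a depth map (rows of depths) to camera-frame points with a pinhole model."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def to_world(points: List[Point], rotation: Matrix, translation: Sequence[float]) -> List[Point]:
    """Apply a camera-to-world rigid transform: p_world = R @ p_cam + t."""
    return [
        tuple(sum(rotation[i][j] * p[j] for j in range(3)) + translation[i] for i in range(3))
        for p in points
    ]


def canonicalize(points: List[Point]) -> List[Point]:
    """Center on the bounding box and scale by its largest half-extent, giving values in [-1, 1]."""
    mins = [min(p[i] for p in points) for i in range(3)]
    maxs = [max(p[i] for p in points) for i in range(3)]
    center = [(lo + hi) / 2 for lo, hi in zip(mins, maxs)]
    # Fall back to 1.0 if every point coincides, to avoid dividing by zero.
    half = max((hi - lo) / 2 for lo, hi in zip(mins, maxs)) or 1.0
    return [tuple((p[i] - center[i]) / half for i in range(3)) for p in points]


def canonical_positions(views) -> List[Point]:
    """views: list of (depth, (fx, fy, cx, cy), rotation, translation) tuples.

    All views are merged in the world frame before normalization, so one view
    and many views end up in the same canonical coordinate range.
    """
    world: List[Point] = []
    for depth, (fx, fy, cx, cy), rotation, translation in views:
        world.extend(to_world(backproject(depth, fx, fy, cx, cy), rotation, translation))
    return canonicalize(world)


# Toy inputs (made up for illustration): a flat 2x2 depth map seen from two camera positions.
depth = [[1.0, 1.0], [1.0, 1.0]]
intrinsics = (1.0, 1.0, 0.5, 0.5)
single = canonical_positions([(depth, intrinsics, IDENTITY, (0.0, 0.0, 0.0))])
multi = canonical_positions([
    (depth, intrinsics, IDENTITY, (0.0, 0.0, 0.0)),
    (depth, intrinsics, IDENTITY, (1.0, 0.0, 0.0)),
])
```

In a real model the canonical coordinates would typically be turned into positional embeddings and combined with the visual tokens; that step is omitted here because the page gives no details about it.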

Incorporating 3D positional information improves spatial understanding in single-view models: compared with its base model, NVILA-Lite-8B, SR-3D achieves higher spatial performance.

@article{cheng2025sr3d,
  title={3D Aware Region Prompted Vision Language Model},
  author={An-Chieh Cheng and Yang Fu and Yukang Chen and Zhijian Liu and Xiaolong Li and Subhashree Radhakrishnan and Song Han and Yao Lu and Jan Kautz and Pavlo Molchanov and Hongxu Yin and Xiaolong Wang and Sifei Liu},
  journal={arXiv preprint arXiv:2509.13317},
  year={2025},
}